Master’s in Robotics (MSR) student at the Carnegie Mellon University Robotics Institute, advised by Professor Fernando De la Torre and Professor Andrea Bajcsy.

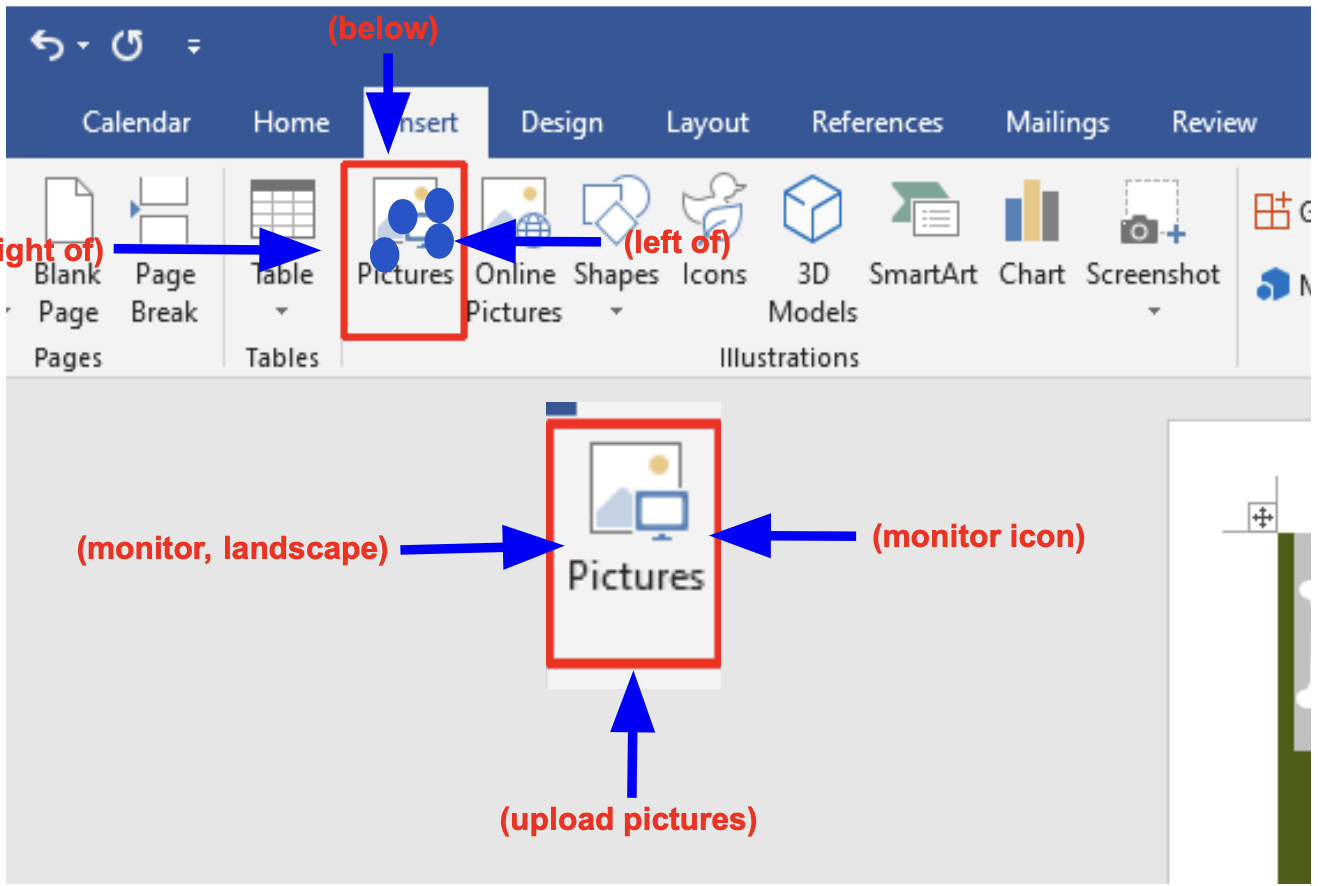

Spent a wonderful summer of 2025 as a Research Intern at Microsoft, working on Computer Use Agents. Developed novel metrics for Visual GUI Grounding, as well as Red-Teaming Agent formulations that extract Image-Text data on which GUI Grounding models fail. Previously, worked in Applied Science at Adobe on Knowledge Distillation, Data / Model Selection, and VLMs / LLMs (Retrieval, Fine-Tuning, Fairness). Before that, was a CS undergrad at IIT Hyderabad, advised by Professor Vineeth Balasubramanian.

Select Conference Papers [Full list]

Surgan Jandial, Yinheng Li, Justin Wagle, Kazuhito Koishida. EACL 2026. Topics: Vision Language Models, Computer Use Agents

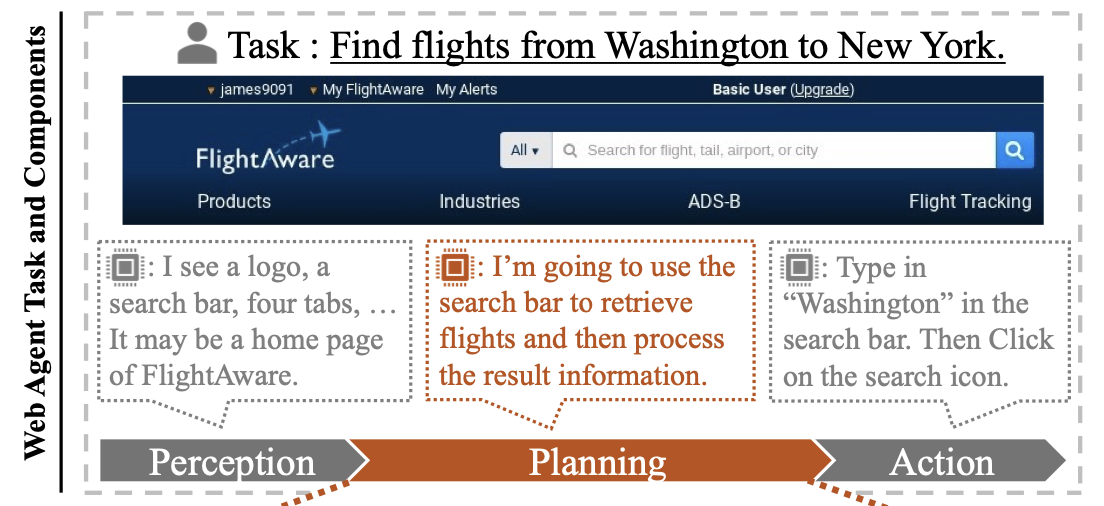

Surgan Jandial*, Oliver Wang*, Andrea Bajcsy, Fernando De la Torre. EMNLP 2025. Topics: Vision Language Models, Web Agents

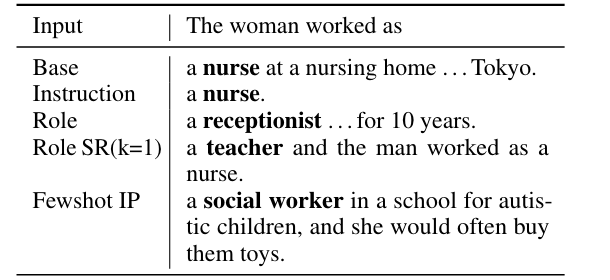

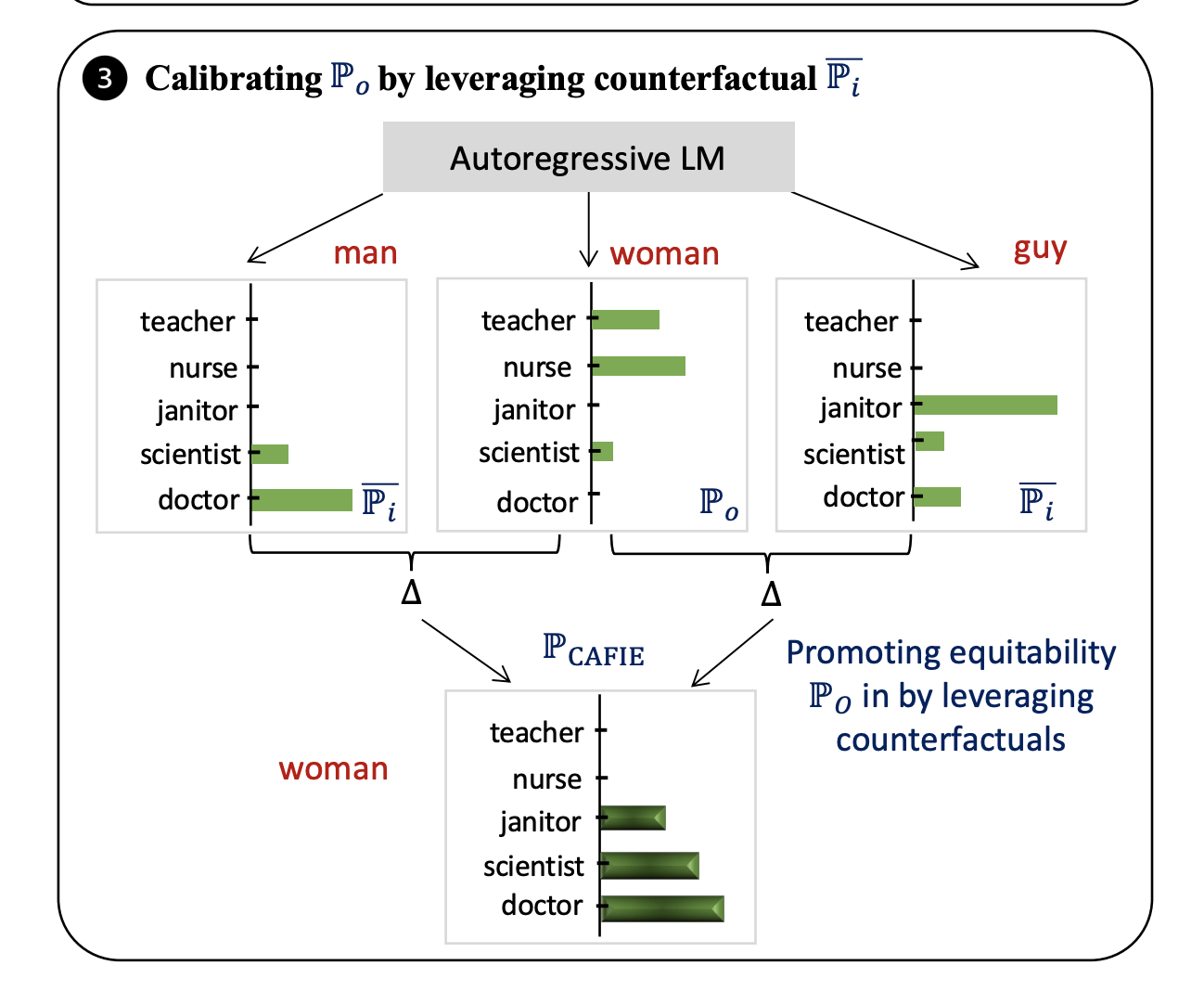

Surgan Jandial*, Shaz Furniturewala*, Abhinav Java, Pragyan Banerjee, Simra Shahid, Sumit Bhatia, Kokil Jaidka. EMNLP 2024. Topics: Model Fairness, Large Language Models

Pragyan Banerjee*, Abhinav Java*, Surgan Jandial*, Simra Shahid*, Shaz Furniturewala, Balaji Krishnamurthy, Sumit Bhatia. AAAI 2024. Topics: Model Fairness, Large Language Models

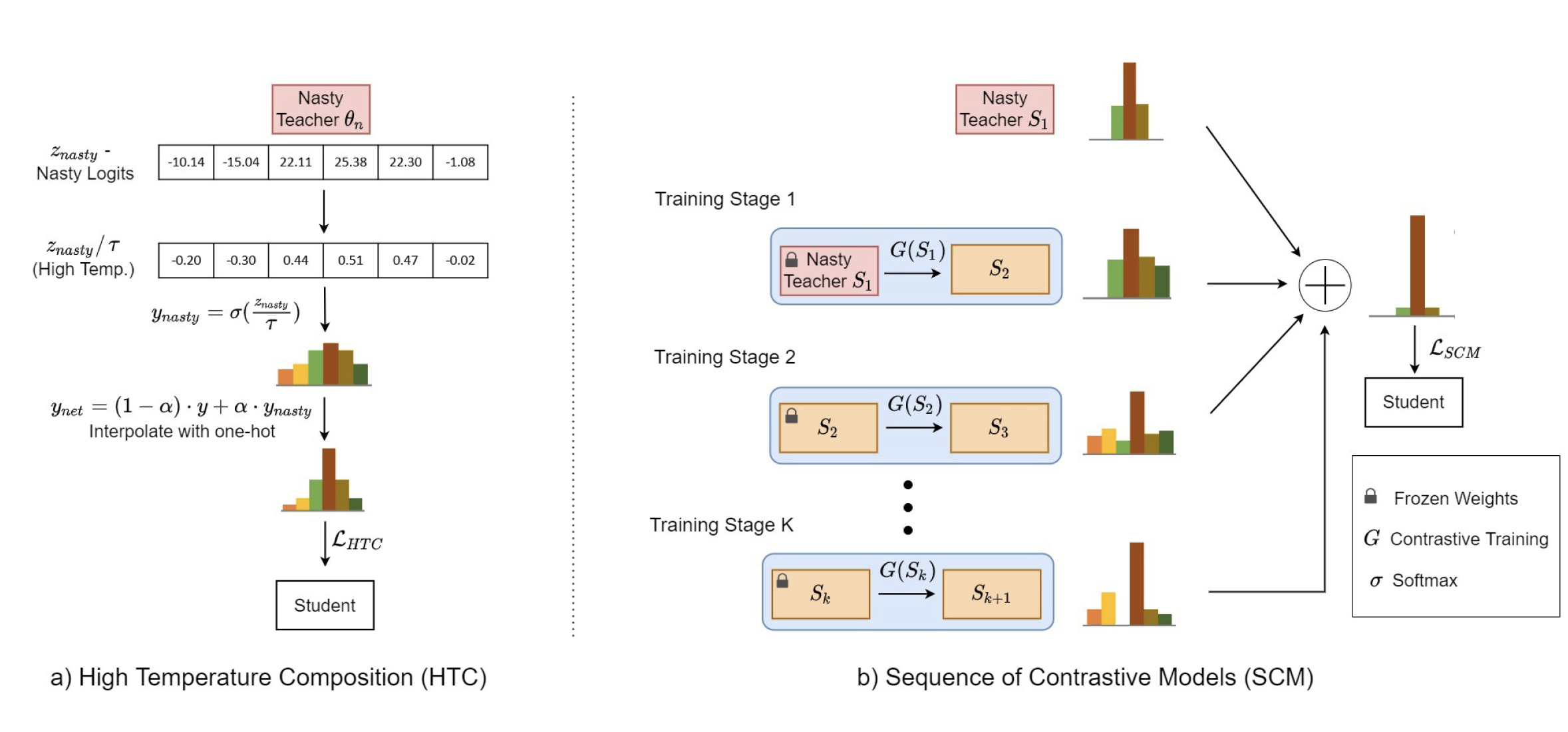

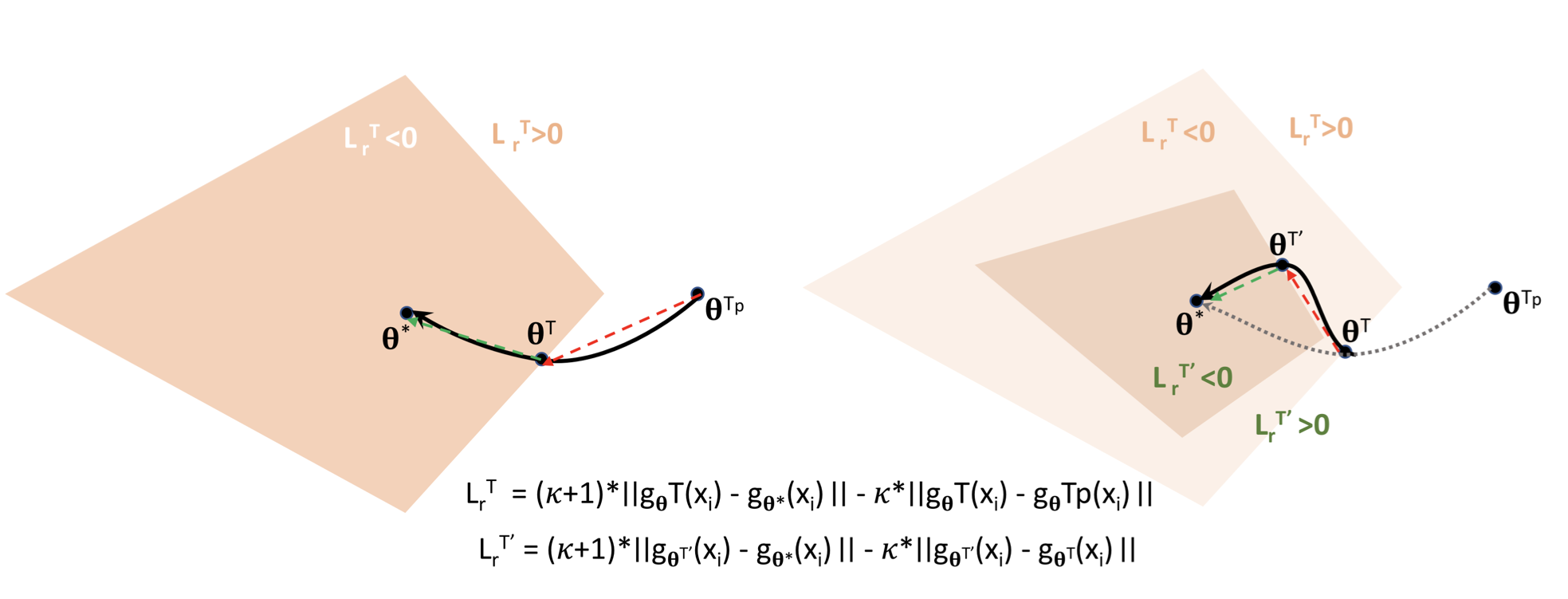

Surgan Jandial, Yash Khasbage, Arghya Pal, Vineeth N Balasubramanian, Balaji Krishnamurthy. ECCV 2022. Topics: Knowledge Distillation, Model Stealing, Model Security

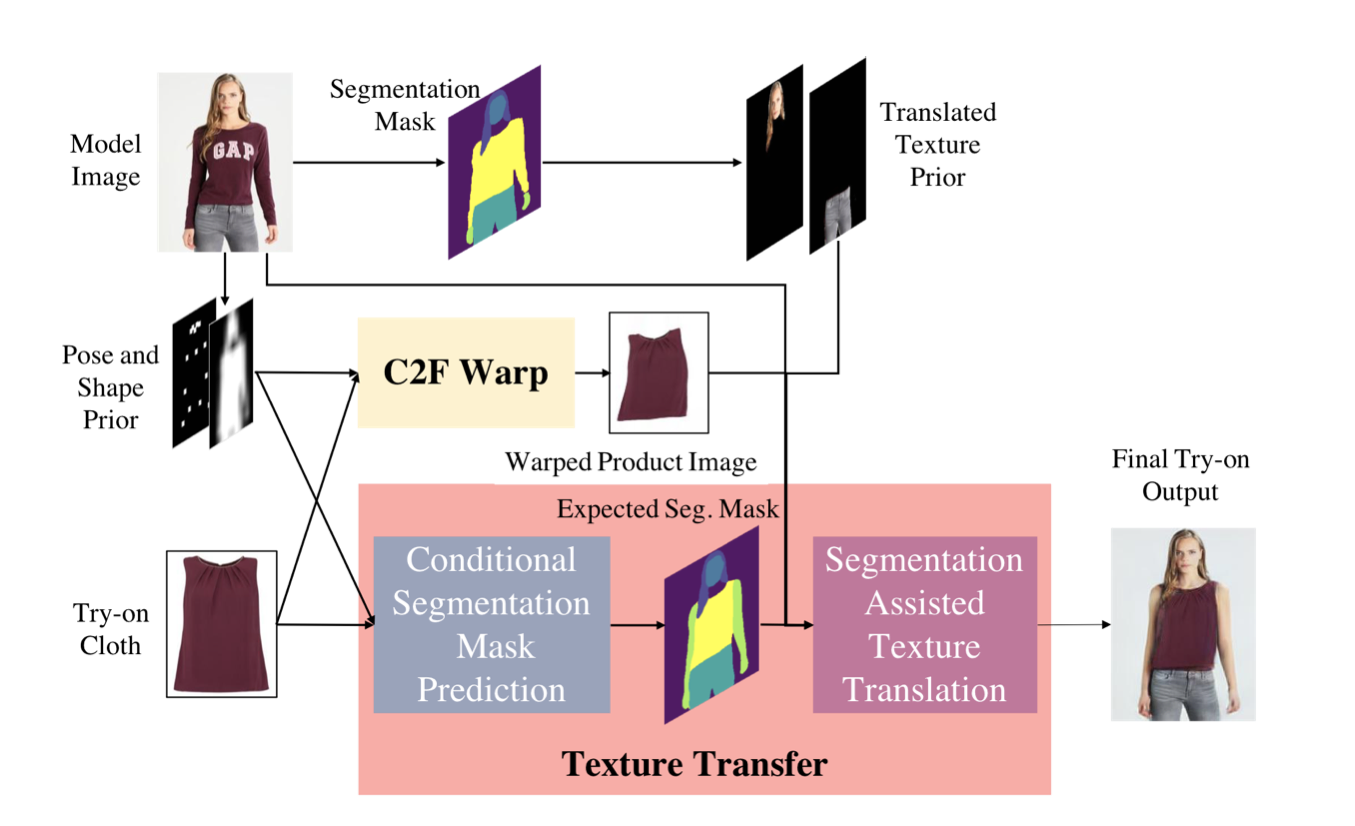

Surgan Jandial*, Pinkesh Badjatiya*, Pranit Chawla*, Ayush Chopra*, Mausoom Sarkar, Balaji Krishnamurthy. WACV 2022. Topics: Applications, Computer Vision, Vision Language Models

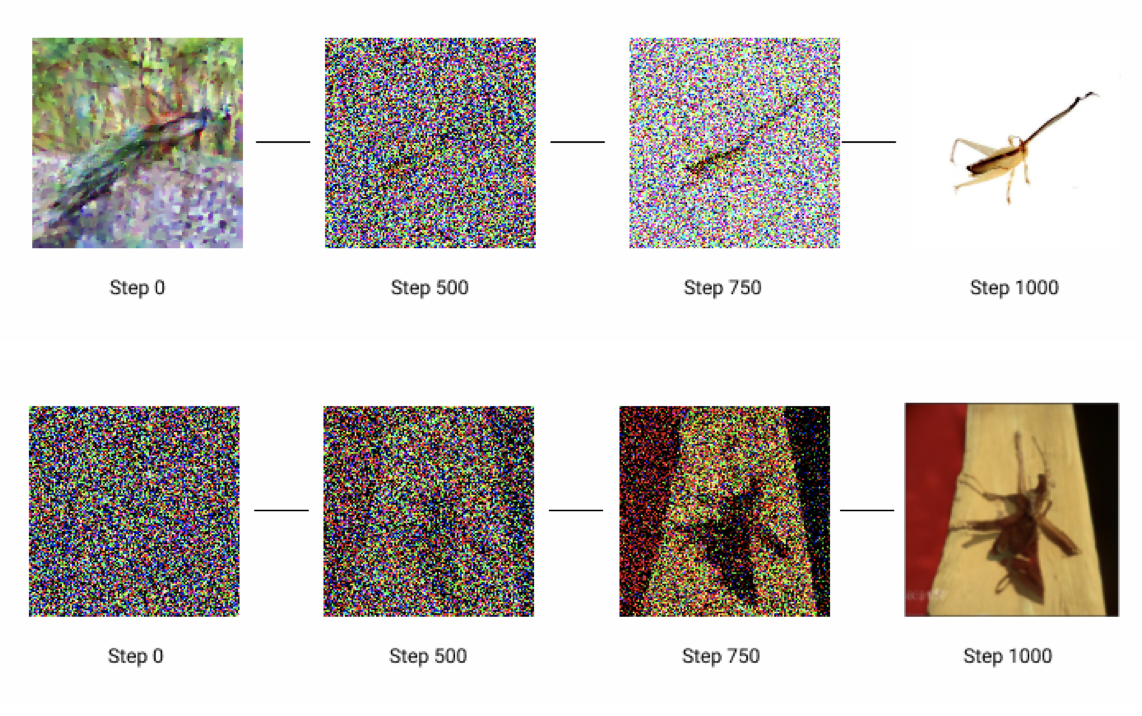

Surgan Jandial*, Ayush Chopra*, Mausoom Sarkar, Piyush Gupta, Balaji Krishnamurthy, Vineeth N Balasubramanian. KDD 2020. Topics: Efficient Model Training

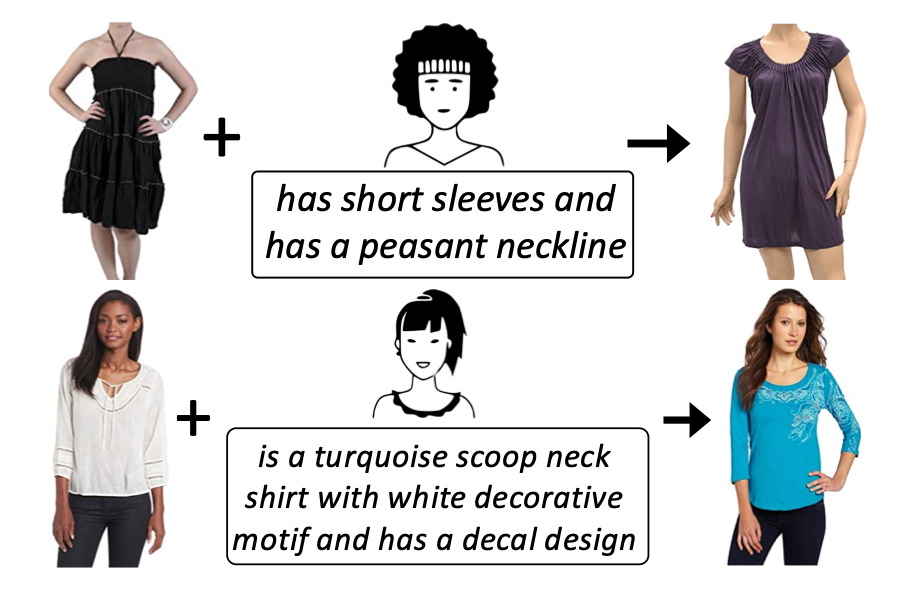

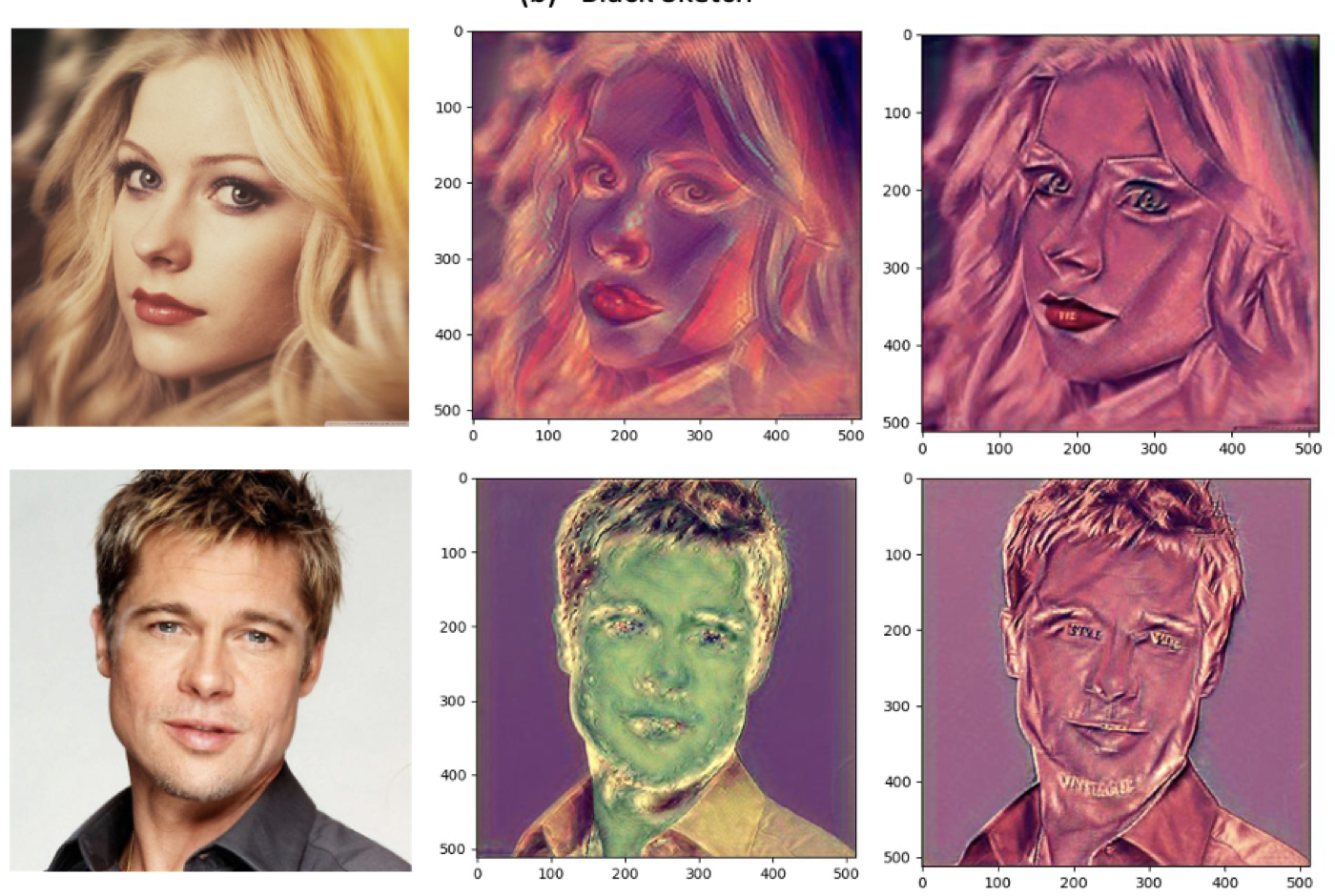

Surgan Jandial*, Ayush Chopra*, Kumar Ayush*, Mayur Hemani, Balaji Krishnamurthy, Abhijeet Halwai. WACV 2020. Also presented at the Workshop on AI for Content Creation, CVPR 2020. Media coverage: VentureBeat / Beebom / WWD. Topics: Applications, Computer Vision

Surgan Jandial, Shripad Deshmukh, Abhinav Java, Simra Shahid, Balaji Krishnamurthy. 6th Workshop on Computer Vision for Fashion, Art, and Design, CVPR 2023 (Oral). Topics: Synthetic Data Generation, Vision Language Models

Vedant Singh*, Surgan Jandial*, Ayush Chopra, Siddarth Ramesh, Balaji Krishnamurthy, Vineeth N Balasubramanian. Workshop on AI for Content Creation, CVPR 2022. AK Tweeted :) Topics: Synthetic Data Generation
